Preparation Notes for ISTQB Agile Tester Certification Exam

Most mock exam websites contain the same questions as the ISTQB boards’ websites. They also contain errors in their answer keys and in spelling. I do not advise solving those mock exams and sample questions.

Common Agile Tester examination question topics:

- Agile manifesto and Agile values

- Typical roles of a tester in an Agile team

- Planning poker and the importance of discussion

- Retrospective meeting

- Risk probability x Risk impact

- Scrum – Kanban – XP comparison

- Test Pyramid

- Exploratory Testing

- Early and Frequent Testing

- Advantages and Frequency of Continuous Integration

- Release Planning vs Iteration Planning

- Build Verification Tests

- Exploratory Testing and Test Charter

- Test Automation in Agile

- Test Independence with Test Teams in Agile

- Black Box Test Techniques

- Agile Testing Quadrants: test levels and test types in each quadrant

- Frequency of integration

- Release planning: Test approach and test plan

- V-model in traditional lifecycles and in Agile

- Code quality in Continuous Integration

- Upward trend in the burndown chart within an iteration

- Integration of development and testing in Agile

- Regression risk and test automation (Automated acceptance tests and automated unit tests)

- Usability tests and test quadrants

- Whole team approach and Early and Frequent Feedback

- Test Pyramid and eliminating defects

- TDD – ATDD – BDD: their focus and the tester’s role in each approach

- Positive scenarios in ATDD

- Sprint Zero and the tester’s role in that sprint

- Testability of Acceptance Criteria

- Quality Characteristics

- Transparency and Visualization in Kanban

- Configuration Management and Configuration Item

- Test Management and Tracking Tools

- Frequency of Continuous Integration

- Difference between release planning and iteration planning

- XP values

- Application Lifecycle Management

- 3C Concept: Card Conversation Confirmation

- Agile is a very fast-paced environment, and automation frees testers to focus on new tasks.

- BDD allows a developer to focus on testing the code based on the expected behavior of the software.

- The ATDD approach allows every stakeholder to understand how the software component has to behave.

- User stories are written to capture requirements from the perspectives of developers, testers, and business representatives.

- A test charter provides the test conditions to cover during a time-boxed testing session. It guides exploratory testing.

- The role of a tester in an Agile project:

- Collaborate with business representatives to help them create suitable acceptance tests.

- Work with developers to agree on the test strategy.

- Decide on test automation approaches.

- In Agile projects, the release plan is updated either after each iteration or at worst after every few iterations.

- How Agile manages quality: quality and review steps are built in throughout the project, and recurring retrospectives regularly check the effectiveness of the quality processes.

- Negative tests should come after positive tests in ATDD.

- Updating charts is a task of Scrum Master.

- Unit and integration level tests are automated and are created using API-based tools.

- System and acceptance level tests are automated using GUI-based tools.

- In TDD, automated test cases guide code development.

- Specific BDD frameworks can be used to define acceptance criteria in the given/when/then format.
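The given/when/then format can be illustrated without any framework. The sketch below is a plain Python test in which each scenario follows one given/when/then acceptance criterion; a real BDD framework (for example behave or Cucumber) would instead bind plain-language Given/When/Then steps written in a feature file to step functions. The `Account` class and its no-overdraft rule are hypothetical.

```python
class Account:
    """Hypothetical system under test: a bank account with a balance."""

    def __init__(self, balance: int) -> None:
        self.balance = balance

    def withdraw(self, amount: int) -> bool:
        """Withdraw if funds suffice; the no-overdraft rule is an assumption."""
        if amount > self.balance:
            return False
        self.balance -= amount
        return True


# Scenario: the customer withdraws cash within the available balance
def test_withdraw_within_balance() -> None:
    # Given an account with a balance of 100
    account = Account(balance=100)
    # When the customer withdraws 30
    accepted = account.withdraw(30)
    # Then the withdrawal is accepted and the balance becomes 70
    assert accepted
    assert account.balance == 70


# Scenario: the customer tries to withdraw more than the balance
def test_withdraw_exceeding_balance() -> None:
    # Given an account with a balance of 100
    account = Account(balance=100)
    # When the customer tries to withdraw 150
    accepted = account.withdraw(150)
    # Then the withdrawal is rejected and the balance stays 100
    assert not accepted
    assert account.balance == 100
```

With pytest installed, running `pytest` on this file collects both `test_` functions. The positive scenario is listed before the negative one, in line with the advice that negative tests come after positive tests.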

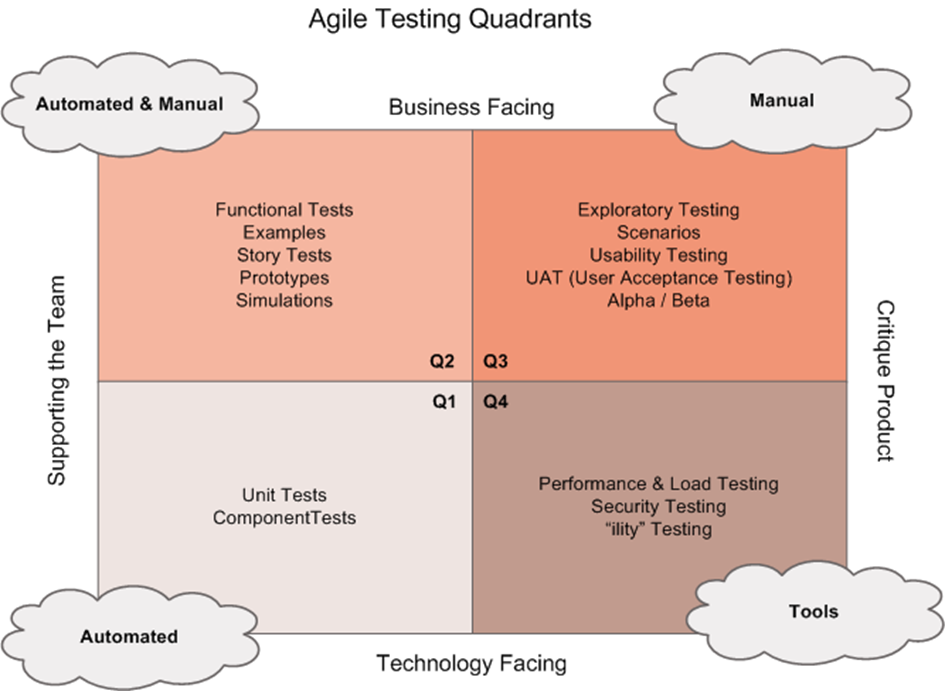

- Quadrant 2 (Q2) is system level, business facing, and confirms product behavior.

- A high estimate usually means that the story is not well understood or should be broken down into multiple smaller stories.
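One round of planning poker can be sketched as follows. It assumes a modified Fibonacci deck and a hypothetical split threshold of 13; teams choose their own deck and rules. When the cards differ, the members holding the lowest and highest cards explain their reasoning before the team votes again, and a very high card flags a story that may be poorly understood or too large.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed card deck (a modified Fibonacci sequence); teams pick their own.
DECK = (1, 2, 3, 5, 8, 13, 20, 40, 100)
# Hypothetical threshold: estimates above it suggest the story is unclear
# or should be broken down into smaller stories.
SPLIT_THRESHOLD = 13


@dataclass
class RoundResult:
    consensus: bool
    estimate: Optional[int]  # agreed estimate, only when consensus is reached
    discuss: tuple[str, ...]  # members who should explain their outlying cards
    consider_split: bool  # True if any card exceeds SPLIT_THRESHOLD


def evaluate_round(votes: dict[str, int]) -> RoundResult:
    """Evaluate one planning poker round; votes maps member name -> card."""
    if not votes:
        raise ValueError("at least one vote is required")
    for member, card in votes.items():
        if card not in DECK:
            raise ValueError(f"{member} played {card}, which is not in the deck")
    low, high = min(votes.values()), max(votes.values())
    consider_split = high > SPLIT_THRESHOLD
    if low == high:
        return RoundResult(True, low, (), consider_split)
    # No consensus: the lowest and highest estimators explain their reasoning,
    # and the team votes again after the discussion.
    outliers = tuple(sorted(m for m, c in votes.items() if c in (low, high)))
    return RoundResult(False, None, outliers, consider_split)
```

For example, `evaluate_round({"dev": 3, "tester": 8, "po": 5, "ux": 5})` reports no consensus and asks `dev` and `tester` to explain their estimates.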

- Retrospectives may also address the testability of the applications, user stories, features, and system interfaces, as well as root cause analysis of defects.

- Understanding and planning for the automation of user stories, and supporting various levels of testing, are done in iteration planning.

- Feature verification testing, which is often automated, may be done by developers or testers and involves testing against the user story’s acceptance criteria.

- Feature validation testing is usually manual and can involve developers, testers, and business stakeholders working collaboratively to determine whether the feature is fit for use, to improve visibility of the progress made, and to receive real feedback from the business stakeholders.

- During exploratory testing, the results of the most recent tests guide the next tests.

- Integration planning involves identifying all dependencies between underlying functions and features.

- Appropriate test techniques are NOT selected based on tester’s skill set.

- Appropriate test techniques are selected to mitigate each risk based on the risk, the level of risk and the relevant quality characteristics.
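Risk level is commonly computed as risk probability (likelihood) multiplied by risk impact. The sketch below scores hypothetical quality risks on an assumed 1 to 5 scale, orders them by level, and maps each level to a test depth using made-up thresholds. Real teams calibrate both the scales and the thresholds themselves.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QualityRisk:
    name: str
    likelihood: int  # assumed scale: 1 (very unlikely) to 5 (very likely)
    impact: int  # assumed scale: 1 (negligible) to 5 (critical)

    def __post_init__(self) -> None:
        for value in (self.likelihood, self.impact):
            if not 1 <= value <= 5:
                raise ValueError("likelihood and impact must be between 1 and 5")

    @property
    def level(self) -> int:
        # Risk level = risk probability (likelihood) x risk impact
        return self.likelihood * self.impact


def choose_test_depth(risk: QualityRisk) -> str:
    """Map a risk level to a test depth using hypothetical thresholds."""
    if risk.level >= 15:
        return "extensive"  # e.g. several techniques, many test cases
    if risk.level >= 6:
        return "broad"
    return "cursory"


def prioritize(risks: list[QualityRisk]) -> list[QualityRisk]:
    """Order risks by level, highest first, so the riskiest are tested earliest."""
    return sorted(risks, key=lambda r: r.level, reverse=True)
```

With the risks "Payment fails under load" (3, 5), "Typo in help page" (4, 1), and "Login rejects valid users" (2, 5), the levels are 15, 4, and 10, so `prioritize` puts the payment risk first and the typo last.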

- Definition of Coverage: All relevant test basis elements of a particular release have been covered by testing. The adequacy of the coverage is determined by what is new or changed, its complexity and size and the associated risks of failure.

- A decision table is the best choice when testing system behavior for different combinations of inputs.
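A decision table lists every combination of condition values together with the expected action, and each combination (rule) becomes one test case. The sketch below uses a hypothetical discount rule with two conditions, checks that the table is complete, and runs one test per rule against a hypothetical implementation.

```python
from itertools import product

# Decision table for a hypothetical discount rule.
# Each key is one rule (one column of the table): the condition values
# (is_member, has_valid_coupon); each value is the action: discount in percent.
DECISION_TABLE: dict[tuple[bool, bool], int] = {
    (True, True): 20,
    (True, False): 10,
    (False, True): 5,
    (False, False): 0,
}


def discount_percent(is_member: bool, has_valid_coupon: bool) -> int:
    """Hypothetical implementation under test."""
    discount = 10 if is_member else 0
    if has_valid_coupon:
        discount += 10 if is_member else 5
    return discount


def run_decision_table_tests() -> list[tuple[bool, bool]]:
    """Run one test case per rule and return the condition sets that fail."""
    # Completeness check: every combination of condition values needs a rule.
    all_combinations = set(product([True, False], repeat=2))
    missing = all_combinations - set(DECISION_TABLE)
    if missing:
        raise ValueError(f"decision table is incomplete: {sorted(missing)}")
    return [
        conditions
        for conditions, expected in DECISION_TABLE.items()
        if discount_percent(*conditions) != expected
    ]
```

`run_decision_table_tests()` returns an empty list when the implementation matches every rule; with n Boolean conditions, a full table has 2^n rules.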

- Exploratory testing requires the skill to find possible problems and an understanding of how to determine when a system fails.

- Automated acceptance tests are run regularly as part of the continuous integration of the full system build. They provide feedback on product quality with respect to regression since the last build.

- A configuration item is a self-contained unit of a system that is used for identification and change control. Configuration management uses processes and tools to control configuration items, especially for change management. Common configuration item types include software, hardware, and documentation. Configuration items help identify the components of a system.

- An independent, separate test team may work on developing automated test tools, carrying out non-functional testing, creating and supporting test environments and data, and carrying out test levels that may not fit well within a sprint.

- Static analysis on the code is an example of unit testing.

- The Q2 testing quadrant is useful when creating automated regression test suites.

- The Q3 testing quadrant uses realistic test scenarios and data.

- TDD tests are automated and used in continuous integration.

- If behavior is mentioned, then the approach is BDD.

- To determine performance requirements, review similar products, existing documentation, and acceptable performance levels, and talk with the business users.

- Functional behavior, quality characteristics, scenarios, and constraints are acceptance criteria for a user story. Skillset and time limits are not acceptance criteria for a user story.

- Sprint zero is the first iteration of the project where many preparation activities take place.

- In Agile projects quality risk analysis takes place at Release Planning and Iteration Planning.

- Test bases: Lessons learned, user profiles, standards, risks, design.

- Application Lifecycle Management (ALM) records user stories and estimates, and associates development and testing tasks with them.

- The five XP values are Communication, Simplicity, Feedback, Courage, and Respect.

- The code itself cannot be a test oracle.

- An individual’s knowledge, user manuals, and an existing system used as a benchmark can be test oracles.

- Testers define necessary test levels.

- A product owner is the one who generates, maintains, and prioritizes product backlog.

- Alpha and beta tests may occur at the end of each iteration, after the completion of each iteration, or after a series of iterations, but not during an iteration.

- A build verification test is an initial subset of automated tests that covers critical system functionality; it should be created after a new build is deployed.

- Automated unit tests provide feedback on code and build quality but not product quality.

- The tester is responsible for understanding, implementing, and updating the test strategy.

- Product backlog is defined and redefined in Release Planning.

- Power of Three: Testers, Developers, Business Representatives.

- 3C Concept: Card, Conversation and Confirmation for User Stories.

- Test levels are test activities that are logically related, often by the maturity and completeness of the item under test.

- To improve overall product quality, many Agile teams conduct customer satisfaction surveys.

- The risk of introducing regression in Agile is high due to extensive code churn (lines of code added, modified, or deleted from one version to another).

- Working code should be delivered at the end of each iteration.

- Test level entry and exit criteria are more closely associated with traditional life cycles.

- Automation is essential so as to “ensure the team maintains or increases its velocity” and “ensure that code changes do not break software build”.

- The result of BDD is test classes used by developers to develop test cases.

- Testing quadrants can be used as an aid to describe the types of tests to all stakeholders.

- An ALM tool provides visibility into the current state of the application, which enables collaboration with distributed teams.

- Usability testing is part of the 3rd quadrant.

- Performance testing is part of the 4th quadrant.

- Release planning includes clarifying user stories and ensuring that they are testable.

- A selection of users may perform beta testing on the product after the completion of a series of iterations.

- When to stop testing: The achieved test coverage is considered enough. The coverage limit is justified by the complexity of the included functionality, its implementation and the risks involved.

- TDD is a test-first approach to develop reusable automated tests.

- TDD cycle is continuously used until the software product is released.

- TDD helps to document the code for future maintenance efforts.
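The TDD cycle (write a failing test, make it pass, refactor) can be sketched in plain Python. The `is_leap_year` function is a hypothetical example; the point is the order of work and the fact that the test remains afterwards as executable documentation.

```python
# Step 1 (red): write the test first. Running it at this point fails with a
# NameError, because is_leap_year does not exist yet.
def test_is_leap_year() -> None:
    assert is_leap_year(2024)  # divisible by 4
    assert not is_leap_year(2023)  # not divisible by 4
    assert not is_leap_year(1900)  # century year not divisible by 400
    assert is_leap_year(2000)  # century year divisible by 400


# Step 2 (green): write just enough code to make the test pass.
def is_leap_year(year: int) -> bool:
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# Step 3 (refactor): clean up the code while keeping the test green.
# The test stays in the suite, runs in continuous integration, and documents
# the expected behavior for future maintainers.
test_is_leap_year()
```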

- Static Analysis, Integration Tests and System Tests are in Q1.

- Some tests and Testing in Production are in Q3.

- Non-functional tests are in Q4.

- Reliability and Recoverability are in Q4.

- Black-box testing refers to documentation (such as a specification); error guessing does not.

- The metrics-based estimation technique uses calculations from historical data, while the expert-based approach relies on team wisdom.

- The final stage of defect tracking is not bug fixing.

- Defects may be raised against documentation.

- A fault need not affect the reliability of a system.

- In the test implementation phase: verifying that the test environment has been set up correctly.

- Reason for testing: To build confidence in the application.

- Testing is a form of quality control which is a part of quality assurance.

- Valid approach to component testing:

- Functional testing of the component in isolation.

- White box-based testing of the code without recording defects.

- Automated tests are run until the component passes.

- Organizational success factor for review: Participants have adequate time to prepare.

- White box testing techniques are used to both measure coverage and to design tests to increase coverage.

- How experience-based techniques differ from black-box techniques: they depend on an individual’s personal view rather than on a documented record of what the system should do.

- At the end of each iteration, software that provides value to the customer is expected to be delivered.

- For continuous integration, unit testing and integration testing are needed.

- Acceptance tests are often configured into the continuous integration process and start automatically when the build is deployed to the test system.

- Exploratory testing is preparing to explore ways in which the software might fail.

- Teams may find it challenging to continuously integrate new features to the previously developed features and test the system as a whole if features are very different from each other.

- Defining how the team designs, writes, and stores test cases should occur during release planning.

- Release planning activities:

- Defining testable user stories including acceptance criteria.

- Defining the necessary test levels.

- Participating in quality risk analysis.

- Internal alpha tests and external beta tests may occur at the close of each iteration, after the completion of each iteration, or after a series of iterations, but not during an iteration.

- Actively acquiring information from stakeholders is primarily a tester’s task.

- During sprint zero:

- Identify the scope of the project.

- Plan, acquire and install needed tools.

- Create an initial strategy for all test levels.

- Specify the definition of done.

- Quality risk analysis takes place in Release Planning and Iteration Planning.

- In iteration planning, user stories are broken down into tasks.

- 3C Concept: Card-Conversation-Confirmation

- Confirmation: the acceptance criteria, discussed in the conversation during user story creation, are used to confirm that the story is done.

- Acceptance test driven development is a collaborative approach that allows every stakeholder to understand how the software component has to behave and what the developers, testers, and business representatives need to do to ensure that behavior.

- INVEST for a user story: Independent, Negotiable, Valuable, Estimable, Small, Testable

- Quality plans are test related work products.

- Build verification tests, an initial subset of automated tests covering critical system functionality and integration points, should be created immediately after a new build is deployed into the test environment.

- The test pyramid concept is based on the testing principle of early QA and testing, that is, eliminating defects as early as possible.

- Tester’s role:

- Testers collaborate with business representatives to help them create suitable acceptance tests.

- Testers work with developers to agree on the test strategy.

- Testers decide on the test automation process.

- Use of test automation at all test levels allows Agile teams to provide rapid feedback on quality.

- Q2 is system level, business facing, and confirms product behavior. This quadrant contains functional tests, examples, story tests, and simulations.

- Exploratory testing is important in Agile projects due to the limited time available for test analysis.

- Definition of Done:

- The defect density is within acceptable limits.

- The defect intensity is within acceptable limits.

- The estimated number of remaining defects is within acceptable limits.

- The consequences of unresolved and remaining defects (e.g., severity and priority) are understood and acceptable.
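Defect density is commonly calculated as the number of defects divided by the size of the work product, for example defects per thousand lines of code (KLOC). The sketch below checks two of the defect-related Definition of Done items against hypothetical team limits; defect intensity is left out because its exact definition varies between organizations.

```python
def defect_density(defects_found: int, size_kloc: float) -> float:
    """Defects per thousand lines of code (KLOC); size measures vary by team."""
    if size_kloc <= 0:
        raise ValueError("size must be positive")
    return defects_found / size_kloc


def meets_defect_criteria(
    defects_found: int,
    size_kloc: float,
    estimated_remaining: int,
    max_density: float = 1.0,  # hypothetical limit agreed by the team
    max_remaining: int = 5,  # hypothetical limit agreed by the team
) -> bool:
    """Check the defect density and remaining-defect Definition of Done items."""
    return (
        defect_density(defects_found, size_kloc) <= max_density
        and estimated_remaining <= max_remaining
    )
```

For example, 12 defects in 8 KLOC gives a density of 1.5, which exceeds the assumed limit of 1.0, so `meets_defect_criteria(12, 8.0, 3)` returns `False`.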

- Build verification tests run immediately after a new build is deployed into the test environment.

- Automated acceptance tests:

- Are run regularly as part of the continuous integration of full system build.

- Run against a complete system build at least daily.

- Test results from automated acceptance tests provide feedback on quality of product with respect to regression since the last build.

- Automated acceptance tests are generally not run with each code check-in, as they take longer to run than automated unit tests and could slow down code check-ins.

- At the unit test level, static analysis is performed on the code.

- Q2 is useful when creating automated regression tests. It contains functional tests, examples, story tests, and user experience prototypes. These tests check acceptance criteria and can be manual or automated.

- Acceptance criteria of a user story cannot be the skillset of a team member or the time limit to test any particular user story.

- During release planning, the tester supports the clarification of user stories and ensures that they are testable.

- During iteration planning, the tester produces a list of acceptance tests for the user stories, helps break the user stories down into smaller, more detailed tasks, and estimates the testing tasks generated by the new features planned.

- Automation is essential in Agile projects so that teams can maintain or increase their velocity and ensure that code changes do not break the software build.

- CRC card in XP: Class, Responsibility, Collaboration

- Acceptance-level tests: workflow tests and UI tests, by testers

- System-level tests: business rules, via API testing

- Integration-level tests: functional tests, by developers or testers

- Sprint Zero:

- Identify scope of the project.

- Create an initial system architecture and high-level prototype.

- Plan, acquire and install any needed tools.

- Create an initial test strategy for all test levels.

- Perform an initial quality risk analysis.

- Define test metrics.

- Specify definition of done.

- Create the task board.

- Define when to continue or stop testing before delivering the product (test criteria)

- If a time limit is reached, the biggest and riskiest estimation is selected.

- Exploratory Testing = Dynamic Testing = Heuristic Testing

- Exploratory testing is Q3 testing that focuses on validation from the customer and user perspective rather than on requirements.

- Exploratory testing:

- One or more test conditions define the scope of the charter.

- Relative priority helps people to decide the order in which to run the exploratory tests.

- Pre-loaded test data files can make the tests more efficient.

- Test basis documents such as requirements and user stories give test ideas.

- Test oracle allows determination of expected results.

- Test steps and expected results do not belong in the test charter, because they constrain the test and make it scripted rather than exploratory.

- Test conditions, priority, test data files, test basis, and test oracle should be included in an exploratory test charter.
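The charter contents listed above can be modeled as a small data structure. This is a sketch with illustrative field names, not a standard schema; the time box comes from the note that exploratory sessions are time-boxed. The structure deliberately has no fields for test steps or expected results.

```python
from dataclasses import dataclass, field


@dataclass
class ExploratoryCharter:
    """Illustrative exploratory test charter; field names are assumptions."""

    test_conditions: list[str]  # one or more; together they define the scope
    priority: int  # relative priority, 1 = explore first
    time_box_minutes: int  # exploratory sessions are time-boxed
    test_data_files: list[str] = field(default_factory=list)  # pre-loaded data
    test_basis: list[str] = field(default_factory=list)  # e.g. user stories
    test_oracle: str = ""  # how to decide whether a result is correct
    # Deliberately no "steps" or "expected_results" fields: prescribing them
    # would turn the session into a scripted test.

    def __post_init__(self) -> None:
        if not self.test_conditions:
            raise ValueError("a charter needs at least one test condition")
        if self.time_box_minutes <= 0:
            raise ValueError("the time box must be positive")


def session_order(charters: list[ExploratoryCharter]) -> list[ExploratoryCharter]:
    """Order charters by relative priority (lowest number first)."""
    return sorted(charters, key=lambda c: c.priority)
```

`session_order` uses the relative priority to decide which exploratory sessions run first; a charter without any test condition is rejected at construction time.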

- Scrum provides a framework for organizing teams and managing projects.

- XP proposes development practices, but Scrum does not.

- A story board or task board provides a full view of all user stories and tasks and gives a clear indication of project testing status against delivered functionality.

- Burndown charts do not provide the granularity needed to provide an overview of the project testing status.

- Responsibility for the quality of user stories is shared among all team members.

- Testers coach other team members on testing and ensure that all tests are executed. They are also responsible for ensuring that test tools are used correctly by the team.

- Quality characteristics describe how the system performs the specified behavior. They may also be referred to as quality attributes or non-functional requirements.

- Acceptance tests should not add anything to the user story.

- Test charter activities should be defined before starting an exploratory testing session.

- Exploratory testing is not an ad-hoc testing exercise.

- Exploratory testing involves parallel test design and execution.